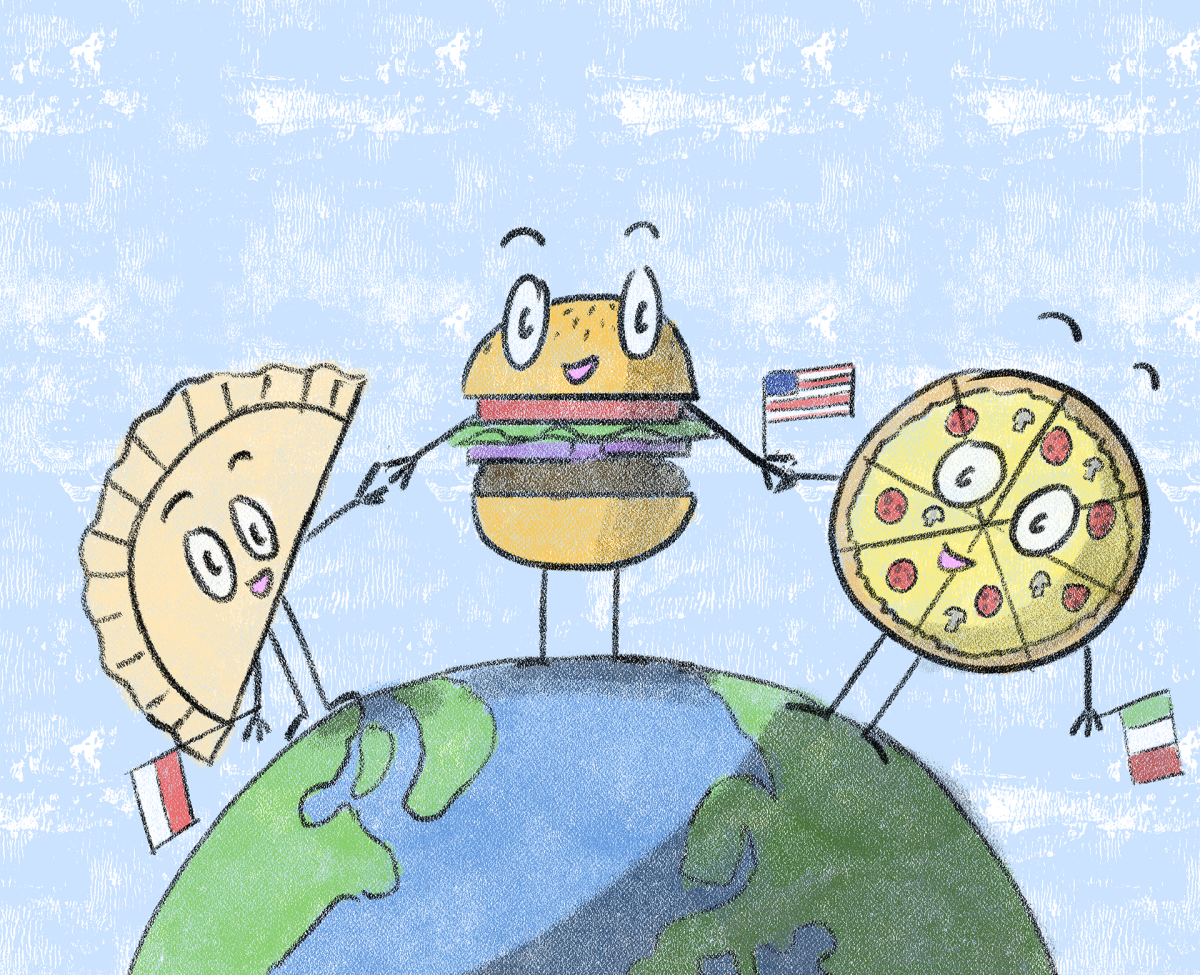

If you live in Austin, you might have seen Google's self-driving cars driving around town. Austin is one of four test cities for the "Self-Driving Project," Google's effort to build a fully autonomous vehicle. Tesla's cars, likewise, come equipped with "Autopilot," a driving mode that is almost, but not entirely, autonomous. The newly announced Model 3 received 325,000 orders in a single week, which suggests that self-driving features will soon be normal. And the rush to build autonomous cars doesn't stop there: at least 25 companies, including Uber and Apple, are reportedly working on them. This raises the question: How do we program these cars to handle ethical dilemmas?

I'm sure you've heard of the "trolley problem," in which the driver of a trolley must decide which fork of a track to take: the one with five people on the rails or the one with one. If we adapt this scenario to a self-driving car, say by forcing a choice between hitting and killing a pedestrian or crashing into a wall, which saves the pedestrian but kills the car's passengers, how do we decide what to do?

We don't decide anything; the car does. Its programmers must have programmed it in advance to make a "decision" in these situations, which means someone has to write a moral algorithm for the car to follow. The problem is that there is no obvious answer about what to do, and these situations can become mind-bogglingly complex.

Take a situation with one passerby and one passenger. My gut says that the car should crash to save the passerby, because the passenger knowingly took on the risk of buying an autonomous car. But what if the passerby is acting maliciously, say, deliberately stepping in front of cars to make them crash and kill their passengers? Then it seems obvious that the car should save the passenger. The best option changes with the circumstances.

Now, consider a situation with two passengers, an adult and a baby. My earlier instinct to sacrifice the passengers now seems wrong, because it means killing two people for the sake of one passerby. This decision involves weighing not only the number of lives against each other, but also each life's potential. It is not readily apparent that two lives are worth more than one, or that a baby's life is more important than an adult's.

With enough autonomous cars on the road, sheer probability guarantees that situations like these will arise. Beyond the dilemmas themselves, there are the thorny issues of "algorithmizing" morality, of weighing the value of a life, and of cars being hacked; the list is endless. Perhaps, with autonomous cars, we should learn how to crawl before we try to drive.

Bordelon is a philosophy sophomore from Houston. Follow him on Twitter @davbord.