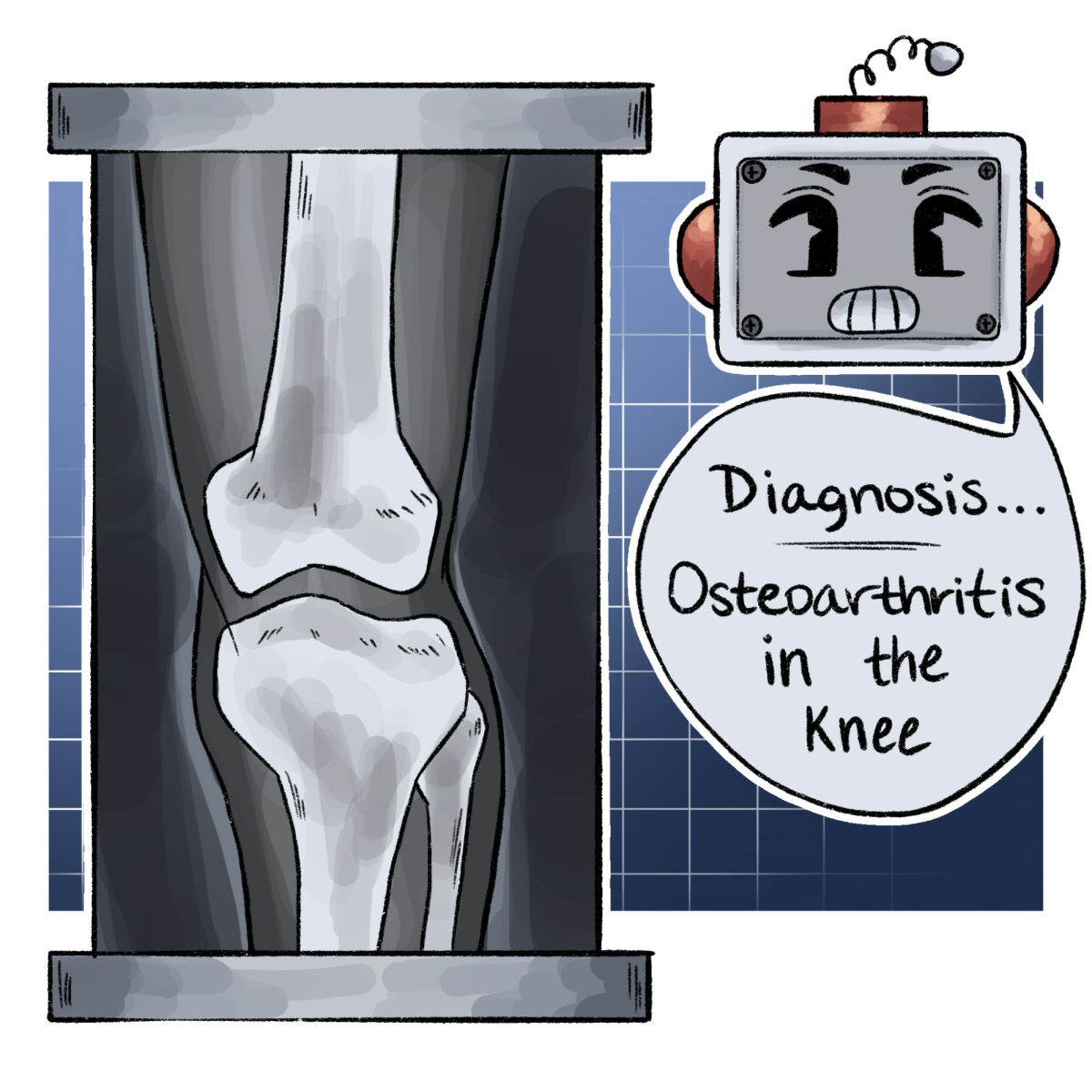

A team led by UT researchers developed an AI model that measures knee osteoarthritis from medical images such as X-rays, establishing a scale of severity that can be used to diagnose individual patients.

The team used 40,000 images of knee joints from the UK Biobank to train the AI and create a severity scale for diagnosing knee osteoarthritis, a disease marked by the narrowing of the joint space between the femur and the tibia, said Vagheesh Narasimhan, co-senior author of the paper.

“One of the easiest and most clear evidence that a person has this is that the spacing between these two joints in your knee goes too close to zero or it gets smaller and smaller,” said Narasimhan, an assistant professor of integrative biology. “We can directly look at this and compute this on the images. And so essentially, you can place people on a scale of the amount of knee joint space narrowing that they have, and this is a measure of severity of this disease.”

The team used the medical images to train the model to measure the space between the femur and tibia, said Emily Javan, co-first author of the paper.

“We trained a model based on a human saying, ‘This is how I want you to measure this space,’” graduate researcher Javan said. “And then we associated that space with, ‘Do you have osteoarthritis? Yes, or no?’ Which is a quantitative value that directly correlates with becoming a yes or no.”

The AI model was necessary to take measurements from tens of thousands of images, a task that would be difficult for a clinician to do alone, said Brianna Flynn, co-first author of the paper.

“Deep learning is useful because we don’t necessarily want to sit and hand measure 40,000 images or however many medical images,” graduate researcher Flynn said. “But if you train a model, and you teach it pretty well, like, ‘Hey, these are these bones, and I care about the distance between these bones,’ the scale is limitless because you’ve taught something that can be done a lot faster, and so that’s the main purpose of the AI.”

The model could also help measure how effective treatment is after diagnosis, Narasimhan said. For example, the disease severity measured from a patient's medical image may be associated with how long they take to recover.

“We’re planning to specifically look at patient-reported outcomes post-surgery,” Narasimhan said. “If we’re performing these diagnoses, but also measuring disease severity using imaging prior to the person getting surgery, does the severity of incoming illness or disease associate with outcomes that they’re seeing after surgical intervention?”

The AI model isn’t meant to replace clinicians but rather to assist with diagnosis in clinical settings, Flynn said.

“This is going to be a great aid for clinicians to be able to do diagnoses and check where along their spectrum their patients lie,” Narasimhan said. “But also, just help them in terms of their time.”