UT researchers received a $2 million grant from the National Science Foundation on Sept. 1 to create more efficient autonomous vehicles by applying insights into how humans learn.

Principal investigator Junmin Wang said while autonomous robots like self-driving vehicles are helpful to society, their computational and energy efficiency is low. He said they also cannot easily adapt to and navigate complex environments, as evidenced by the recent traffic jam caused by Cruise self-driving cars stopping at an intersection.

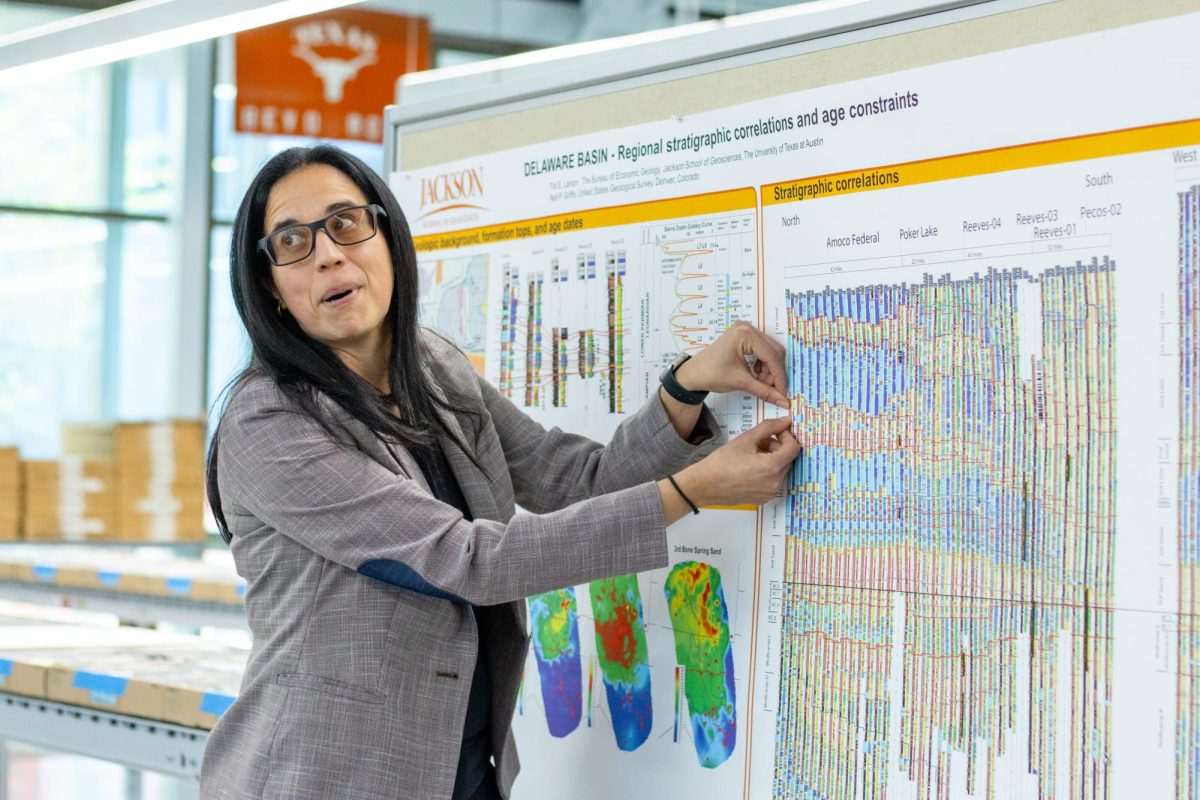

Co-researcher Mary Hayhoe said the team plans to use a driving simulator and track drivers’ eye movements to understand where people focus their attention while driving and how that changes over time. Then, the team will create software for autonomous robots inspired by these neurobiological insights.

Wang said when humans do routine tasks like driving and cooking, they develop contextualized memory as they learn to adapt to the environment over time. Applying this principle to autonomous vehicles will help them learn from experience and navigate roads in a more energy-efficient manner.

Hayhoe said selective perception is another key principle the team hopes to incorporate into its algorithms. She said Light Detection and Ranging technology, which automated vehicles use to sense their surroundings, “continuously (gives) a depth map of everything, (which) is very different from human vision where we’re just attending to a little part of the visual field at any one moment.”

“If you think of biological systems, all animals (have) to get around and a lot of them use vision, and they do it really well,” Hayhoe said. “There’s clearly some aspects of biological vision that one could leverage.”

Hayhoe said while autonomous vehicles take in all the information about their surroundings, humans focus only on the relevant pieces.

“In terms of information, you’re just updating a stored memory with little pieces of important information,” Hayhoe said. “Instead of trying to get everything about the world every few milliseconds, you remember what the world’s like and you just find the changes and update them.”

Wang said the research can also be applied to make robots more understandable to humans.

“If we can make (robots’ computation) in a way which is parallel or similar to how human brains work, then humans will better understand their behavior,” Wang said. “Therefore, robots can better collaborate with humans.”

Wang said he is especially excited about the multidisciplinary nature of the collaboration, as the team includes researchers from engineering, computer science, neuroscience and psychology backgrounds.

“I think this will enable us to (create) out-of-the-box and paradigm-shifting innovations to address some of the national challenges in robotic systems,” Wang said. “If you want to make those (autonomous) robots in the future as part of our society, we need to make humans and robots mutually understand each other in order to harmoniously work together.”