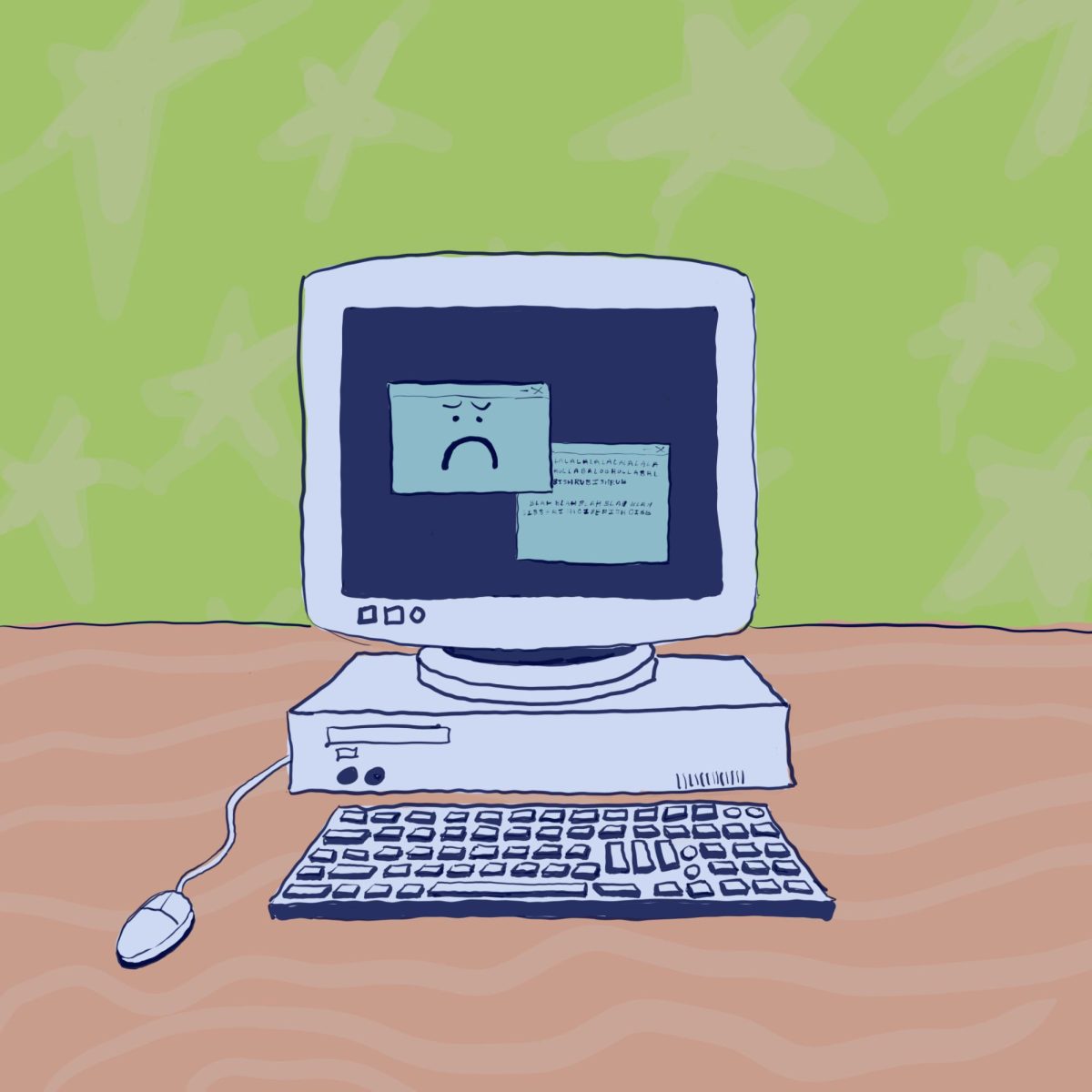

UT researchers, in collaboration with JPMorgan Chase, developed an algorithm that teaches generative AI image-to-image models to unlearn information.

The paper describing the research, published on Feb. 2, details how generative models can be programmed to forget potentially harmful or unnecessary information. The work is the first exploration of machine unlearning specifically for image-to-image models, in which AI uses a source image to generate a target image, with changes from the original chosen by the user.

Paper co-author Radu Marculescu said the algorithm is “counterculture,” because it teaches AI to forget unwanted information, as opposed to more common research that teaches AI to learn more information.

“(It’s about) learning only what you want the machine to learn, not everything,” said Marculescu, a professor in the electrical and computer engineering department. “Some contents need to be removed for obvious reasons, and (our) approach is one approach that does that very efficiently.”

Marculescu said the image-to-image model could remove unwanted objects, such as a handgun, from photographs.

Guihong Li, a UT graduate research assistant and co-author of the paper, said the model could also filter out dangerous source images.

“(If) we want the model to generate an image, it’s possible that the model will generate something that is harmful,” Li said. “For example, a pornographic image or an image with violence, and that this is something we want to avoid.”

Co-author Hsiang Hsu said he hopes companies can use the model to protect the privacy of their former clients. If a company holds private information about a client who has just left, the algorithm could force the model to forget that information and protect the former client's security.

“Those images could be sensitive information about the users, like your face or the image of your house,” said Hsu, a JPMorgan Chase senior researcher. “Those are (things) that (are) very private, and we don’t want any of that information to be stored in a machine learning model.”