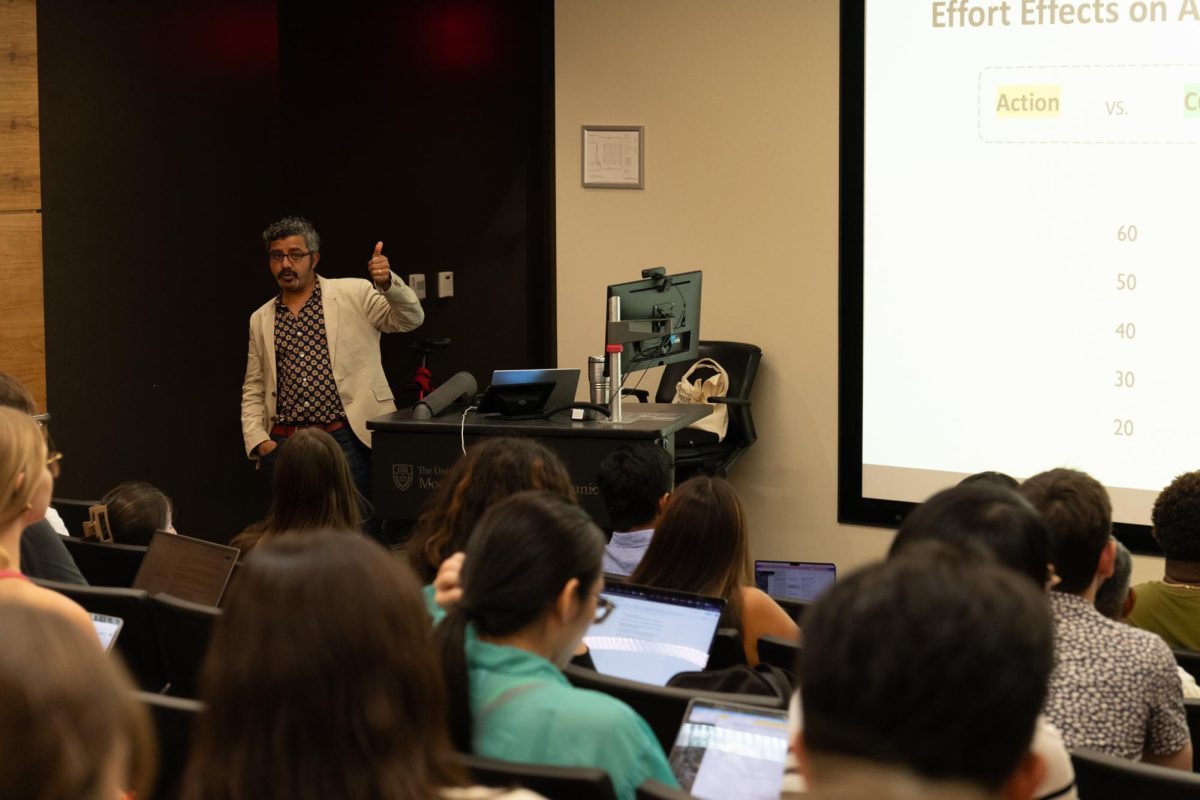

Socially responsible AI use is becoming more important as the use of generative AI grows, Shyam Sundar said during the opening lecture of the Moody College of Communication’s computational social science speaker series on Sept. 20.

In the lecture, titled “Online Media Effects in the Age of AI,” Sundar discussed how democracy intersects with user interactivity on the internet, social media and AI content moderation. Sundar, the director of Penn State’s Center for Socially Responsible Artificial Intelligence, said the key to increasing online communication, especially on mainstream issues such as politics, lies in giving the average user a sense of “self-agency,” or the ability to interact with platforms on their own terms.

Sundar said this sense of “self-agency” manifests in three features of interactivity: medium, message and source.

Medium features let users click, scroll and slide on social media. Message features allow for interpersonal communication, and source features let users become sources themselves by writing, commenting on and recommending content to other social media users.

Sundar said more interactivity cues, such as allowing comments on a post, lead to greater interactivity and open-mindedness, which reduces political polarization. While increased interactivity can be positive, increased use of AI can invite negative exchanges online, he said.

“(This kind of research) can have practical implications for how platforms, even news vendors, design their interfaces and how they can show openness to communication to users in ways that might be more counterintuitive,” Sundar said during the lecture.

Sundar said sharing without clicking, or reposting an article without first reading it, accounted for 75% of shares on Facebook. He said that when people share videos without verifying that they are authentic, they increase the spread of misinformation through AI-generated content such as deepfakes.

“They’re standing behind (their statements) and retweeting (them), whether it’s accurate or not, and that’s a fairly dangerous type of phenomenon,” said Dustin Hall, a first-year master’s student in the journalism research and theory track at Moody.

Sundar said social trust in AI grows when platforms offer user feedback and verification features.

“Don’t hate AI blindly, don’t love AI blindly,” Sundar said. “Make decisions … (by) systematically processing what is good and what is bad about it.”