AI is often framed as a field dominated by engineers and computer scientists, leaving humanities students feeling like outsiders in conversations about technological innovation. But what if the success of AI relies just as much on those who study language, ethics and culture as it does on those who create code?

If AI is going to generate content that accurately reflects human experience, it needs input from people who understand the social and historical contexts of language. This is where humanities majors come in. Their training teaches them to analyze narratives and recognize biases — skills that are crucial in preventing AI from reinforcing stereotypes and misinformation.

“Humanities and social science majors get trained in thinking about the way that actual humans use language for different kinds of social goals,” said anthropology associate professor Courtney Handman, who is currently researching language models and AI. “These disciplines are really attuned to thinking about the different ways that language gets used, understanding fictional voices and different kinds of registers of speech.”

AI models don’t “think.” They generalize from patterns in existing datasets, many of which contain biases that can skew outputs in harmful ways. That can mean reproducing the discrimination already embedded in the data.

“Recent AI models are centrally based on machine learning … (which is) a group of techniques that allows software systems to learn from data,” said Katrin Erk, linguistics and computer science professor. “It’s a very general technique that learns from examples. … Just by learning from gigantic amounts of data, AI can go and apply (what they learn) to a particular task, like ranking product reviews, but only after they’ve already trained to get a good sense of how human language works.”

For AI to interact with humans in an ethical and nuanced way, people trained in language and culture need to contribute to its development.

Humanities students often feel intimidated by the technical aspects of AI. Erk stresses that they shouldn’t be. What matters is building enough knowledge to engage critically with what AI produces. Taking coursework on how AI operates can help students sharpen skills they can then apply to its development.

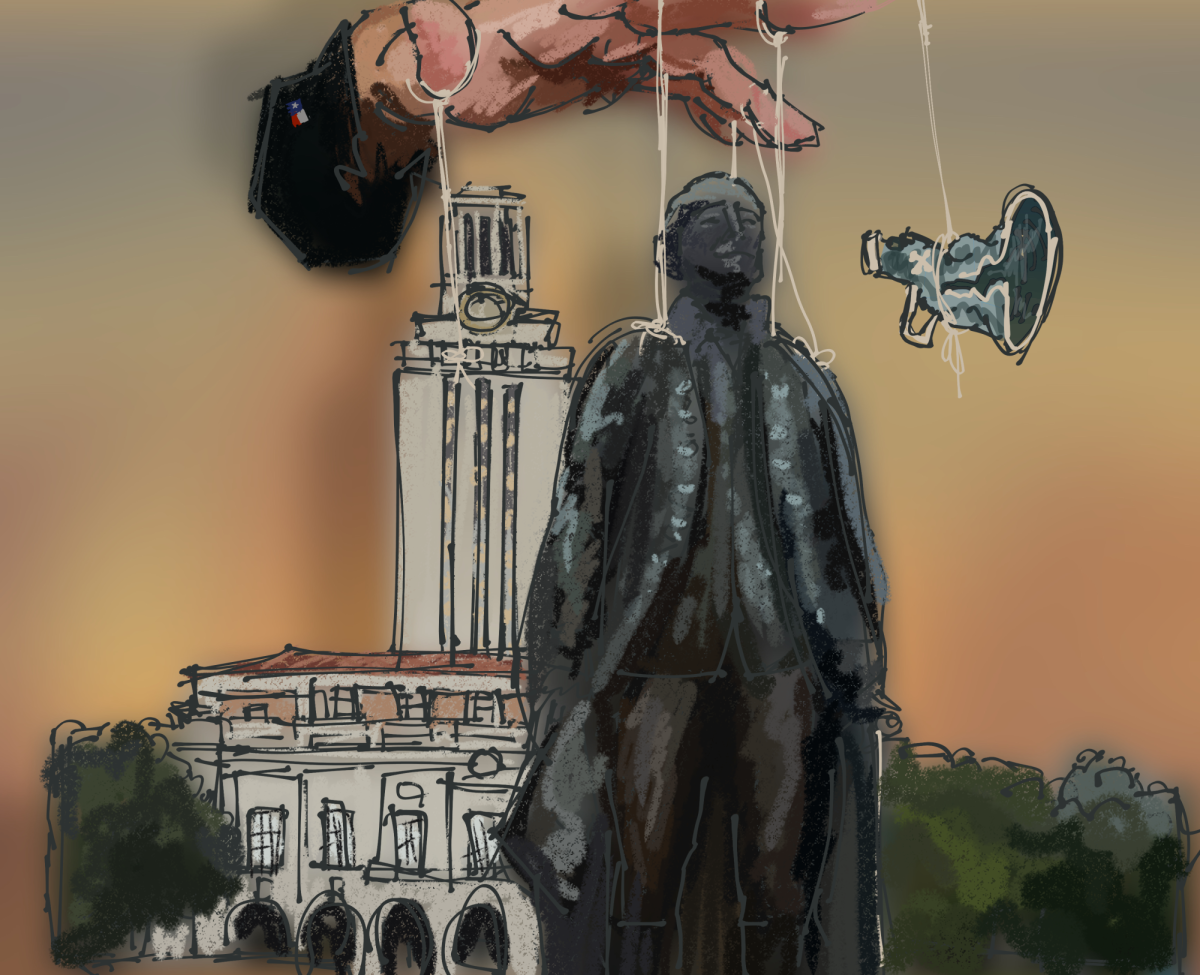

However, AI’s progress relies heavily on advances in computer science and engineering. While ethical considerations matter, some may argue that AI’s evolution centers on mathematics and programming, making engineers essential and humanities scholars supplementary.

Even so, AI development isn’t just about writing better algorithms. It’s about making those algorithms work for all people. Without the insight of the humanities, AI risks becoming an unregulated, biased tool that amplifies existing inequalities. The success of AI depends on collaboration between technical experts and those who understand human culture. If we want AI that serves society rather than merely automating it, humanities majors must not only be part of the conversation but also actively shape AI’s direction.

Huerta is a government sophomore from Victoria, Texas.